Science, as everybody knows, is immersed in a crisis of reproducibility. The crisis was launched in 2005 when John Ioannidis of Stanford University published his now famous paper, “Why Most Published Research Findings Are False,” in which he argued that, through the manipulation of statistical conventions, most published research findings are indeed false.
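For readers who like to see the arithmetic, the core of Ioannidis's argument can be sketched in a few lines of Python. This is a minimal illustration that ignores the bias and multiple-team terms he also models. The function name and the example inputs are my own assumptions, chosen only to show the shape of the effect, and are not figures from his paper.

```python
def positive_predictive_value(prior_odds, alpha=0.05, power=0.8):
    """Chance that a statistically significant finding is actually true.

    prior_odds: ratio of true to false hypotheses tested in a field.
    alpha: false-positive rate (the significance threshold).
    power: probability of detecting an effect that really exists.
    """
    true_positives = power * prior_odds   # true effects that reach significance
    false_positives = alpha               # null effects that reach significance
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs for illustration only.
# A well-powered field testing plausible hypotheses (1 true per 1 false):
well_powered = positive_predictive_value(prior_odds=1.0, alpha=0.05, power=0.8)

# An underpowered field chasing long shots (1 true per 20 false):
long_shot = positive_predictive_value(prior_odds=0.05, alpha=0.05, power=0.2)

print(f"well-powered field: {well_powered:.2f}")
print(f"long-shot field:    {long_shot:.2f}")
```

Under these assumed inputs, most significant findings in the second field would be false even if nobody cheated, which is why any further bias matters so much.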

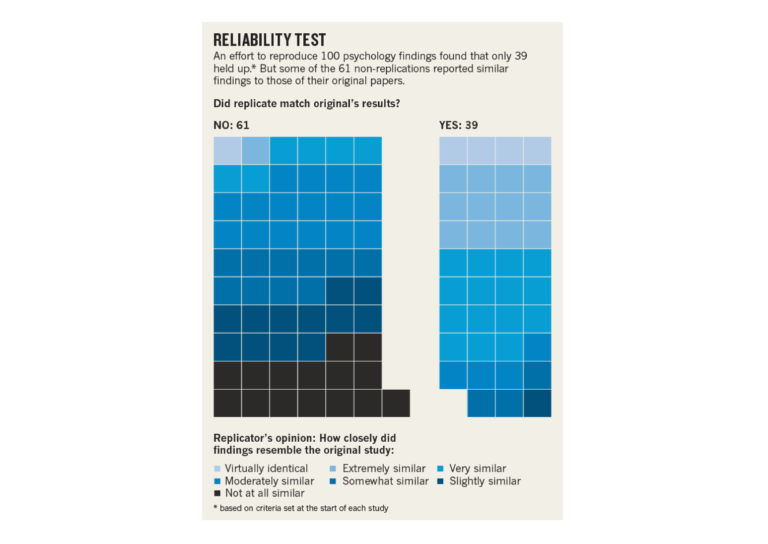

This unfortunate finding was confirmed by Brian Nosek and his colleagues at the University of Virginia in 2015. On trying to replicate 100 psychological experiments that had been published in good journals, they judged that only 39 of the 100 replications had reproduced the original result (Figure 1).

Figure 1

These findings are particularly worrying in nutrition research, where the replication rate would certainly be lower than 39%. Indeed, the successful overthrow by journalist Gary Taubes of the long-dominant hypothesis that dietary fat causes heart disease, strokes, and obesity showed how an entire discipline could be captured by papers that would have a replication rate of almost 0%.

How can science go so badly wrong? One answer is that there will always be bad apples in the barrel. Daniele Fanelli, who like Ioannidis is now at Stanford, showed in 2009 that about 2% of scientists will admit, in private, to having falsified data, while about a third will admit to “questionable research practices” such as omitting inconvenient data from their papers. But scientists know bad apples are not rare, and Fanelli found 14% of scientists knew of other scientists who had falsified data, while 70% of scientists knew of other scientists who had engaged in “questionable research practices.”

But if entire disciplines such as nutrition can go awry en bloc, then we’re not talking bad apples, for it seems that the very enterprise of research can deviate systematically from the paths of truth. What forces can push research that way?

One is funding from industry. Marion Nestle of New York University, author of Food Politics, has described how, on her first day editing the Surgeon General’s Report on Nutrition and Health, she was “given the rules: no matter what the research indicated, the report could not recommend ‘eat less meat’ … nor could it suggest restrictions on the intake of any other category of food.” The food producers, Nestle found, had Congress in their grip, and the politicians would simply block the publication of a report that threatened a commercial interest.

Research funded directly by industry and published in the peer-reviewed literature is just as suspect. David Ludwig of Harvard has shown that studies funded, at least in part, by drink manufacturers are four to eight times more likely to report good news about commercial drinks than those funded independently, and that no research paper funded wholly by the drink manufacturers reported any bad news.

Since scientific journals in some disciplines can be dominated by papers funded by industry — and since (not many people realize this) the great medical charities and foundations can be startlingly dependent on commercial funding — the food industry can ensure that little is published that would “suggest restrictions on the intake” of any category. And the little that does manage to get published can be swamped by the mass of commercially helpful papers. Other industries can monopolize their relevant scientific literature almost as exhaustively.

But government funding is just as distorting. Daniel Sarewitz of Arizona State University, in an essay entitled “Saving Science,” argued that the model by which governments fund science is flawed, because the whole point of government funding is to isolate scientists from the real world and put them (cliché alert) into ivory towers. But, Sarewitz says, “It’s technology that keeps science honest”: only if scientists know their ideas will be tested against reality will they stay honest.

But too many government-funded scientists are not tested against reality. Rather, they are judged by their peers within the government funding agencies. So, if those agencies believe fat causes cardiovascular disease and obesity, they will preferentially award grants to researchers who select their findings to confirm the agencies’ paradigm. Those grantees will then get promoted until they too join the panels of the government granting agencies, whereupon the cycle of error will reinforce itself.

Max Planck once said science advances funeral by funeral, as individual pieces of bad science die with their progenitors. But once an entire field has been infected with error, bad science can become self-perpetuating, in a process Paul Smaldino and Richard McElreath described in a Royal Society journal as “The natural selection of bad science.”

Everything I’ve written here is well known (indeed, every statement comes from well-cited papers from the peer-reviewed literature), but I now want to make two personal statements that are safest made anonymously.

I did my Ph.D. at a world-famous research university, where I witnessed many examples of “questionable research practices,” such as omitting inconvenient data from published work. Initially, I was shocked, but not as shocked as I might have been, because as an undergraduate at a prestigious university, I’d already been taught how to cheat.

As undergraduates, we had to perform a host of laboratory “experiments” that were not experiments at all. They were laboratory exercises whose ultimate findings were predetermined, and the closer our reported results approached those predetermined findings, the better marks we received from our professors.

We undergraduates were obliged to attend demonstrations where a professor might, for example, inject an anaesthetized animal with adrenaline, whereupon the creature’s heart rate and blood pressure would rise. We’d then be provided with photocopies of the readings, which we’d tape into our lab books (this was some years ago) and which we’d write up to demonstrate our understanding of the basic physiological principles. But occasionally an animal wouldn’t respond as it was meant to, and its blood pressure might, say, fall on being injected with adrenaline. Whereupon the professor would circulate pre-prepared photocopies of experiments that had “worked,” which we were expected to write up as if the demonstration had gone to expectation.

So my exposure to questionable research practices as a Ph.D. student was no surprise: I’d long understood that was how science worked. Nonetheless, during my Ph.D. years, I learned to temper my own questionable research practices with common sense. If I was confident I knew how a drug worked, then I might tidy a graph for publication by removing the odd outlying data point, but I’d never “tidy” a graph whose message was actually obscure, because the person I’d be fooling would be myself.

And then I did a post-doc at a good, respectable university. And it was good and it was respectable, but it wasn’t stellar the way my Ph.D. university had been. And at the good and respectable university, I encountered a culture I’d never previously encountered in research, namely a culture of scrupulous honesty. Reader, it was hopeless. The construction of statistically significant graphs that during my Ph.D. years might have taken six weeks now took nine.

Lingering so long over the construction of those graphs didn’t speed the discovery of truth, and constructing them was like wading through treacle. It was all so slow and laborious. And that’s when I got my insight: At my stellar Ph.D. university, I’d learned that great scientists know which corners to cut (didn’t Einstein invent the cosmological constant to get out of an empirical hole?). But had I done my Ph.D. at the good and respectable university, I’d have learned that science was meticulous and scrupulous, and I’d have spent my career being scooped by competitors from stellar institutions. There is such a thing as the natural selection of career stagnation.

The other personal statement I’d like to make anonymously is that I’ve met many of the heroes of the reproducibility crisis (men such as Ioannidis, Nosek, and Fanelli), and they’re different from the usual run of researchers who lead big and successful labs. They’re not alpha males. Instead, the reproducibility heroes are quiet, modest, and thoughtful. Frankly, there’d be no place for them at the forefront of most scientific disciplines, which is perhaps why they’re quietly undermining the structure of an enterprise that might not otherwise be too welcoming.

Why Do Scientists Cheat?