In this 2011 editorial, S. Stanley Young and Alan Karr, of Bioinformatics and the National Institute of Statistical Sciences, respectively, present evidence that the results of observational trials (1) are more likely to be false than true.

The validity of observational evidence has been questioned since at least 1988, when L. C. Mayes et al. found that across 56 topic areas, an average of 2.4 studies could be found to support a particular observational claim alongside 2.3 studies that did not (2). In the case of the antidepressant reserpine, for example, three studies indicated the drug increased cancer risk, while eight did not.

More recently, an analysis of 49 claims from highly cited studies found five of six claims drawn from observational studies — 83% — failed to replicate (3). The authors of the analysis gathered 12 randomized controlled trials (RCTs), which collectively tested the claims of 52 observational trials. Zero of the 52 observational claims were supported by the subsequent RCTs, with the RCTs finding significant effects in the opposite direction of the observational evidence (i.e., an RCT indicated harm when the observational study indicated health, or vice versa) in five out of 52 claims. Young and Karr conclude:

There is now enough evidence to say what many have long thought: that any claim coming from an observational study is most likely to be wrong — wrong in the sense that it will not replicate if tested rigorously.

The authors cite W. Edwards Deming, who introduced the concept of quality control, to argue this scale of failure suggests the process by which observational studies are generated is fundamentally flawed. They specifically point to three factors that consistently lead to unreliable observational evidence:

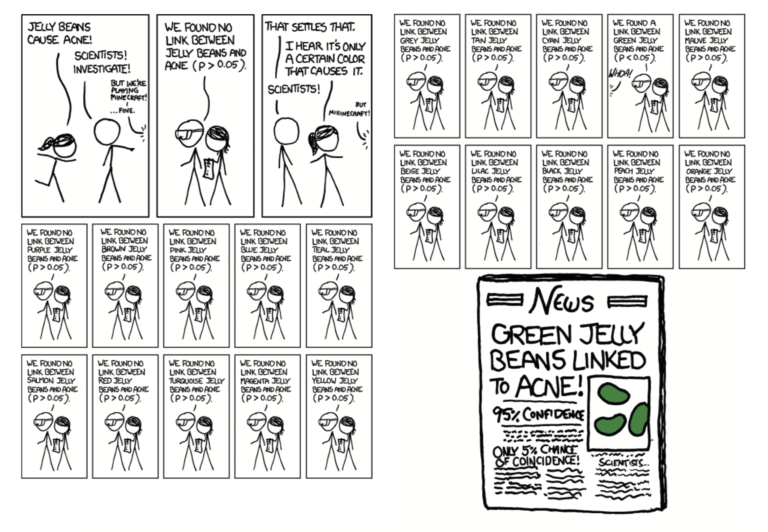

Multiple testing – A single observational study can test many associations simultaneously. For example, as mocked by the xkcd webcomic below, a single study can test for correlations between dozens of dietary and demographic inputs and clinical outcomes. Mere chance indicates some of these correlations will appear statistically significant, even if they lack any biological basis.

Bias – Data is often confounded by unobserved distortions. For example, the HIV drug abacavir was primarily given to patients at high risk of cardiovascular disease. Observational evidence concluded abacavir increased risk of heart disease when, in fact, abacavir was simply being given to patients who were more likely to have a heart attack, with or without the drug (4).

Multiple modeling – As with multiple testing, the regression models used to uncover correlations between variables in observational data can be manipulated until significant associations fall out. Here, the authors note the supposed harms of BPA were derived from an observational study that tested the urine of 1,000 people for 275 chemicals, then compared urine levels of these chemicals to 32 medical outcomes and 10 demographic variables. From this data set, 9 million possible regression models can be constructed, many of which will show significant associations as a result of chance alone (5).

These problems are exacerbated by the widespread reliance on p-values (i.e., statistical significance) to identify meaningful findings. Authors, journal editors, and those who read and interpret science treat statistically significant associations as meaningful and real, even when these significant associations may entirely result from the distortions above.

The authors propose a fix to this process that involves researchers splitting their data prior to analysis, analyzing part using a published statistical methodology, publishing the paper using only this data, and then analyzing the remaining data using the same methodology in an addendum. This method provides a sort of internal replication: If the addendum data informs the same conclusions as the main body of data, these conclusions are more likely to be valid, and if not, the authors’ analytical methods can be questioned.

The takeaway: This paper highlights the extreme degree to which observational evidence is unreliable. Based on the data these authors present, observational evidence is no more likely to be right than wrong, and in fact, it may be more likely to be wrong than right. In other words, any health claim based on observational evidence is only valuable according to the extent to which it informs subsequent controlled trials to test its validity.