In a two-part series for The New York Review of Books, previously covered on CrossFit.com, Marcia Angell analyzes the role the pharmaceutical industry has played in redefining psychiatric disorders to “increase drug company sales, to enhance the income and status of the psychiatry profession, and to get insurance coverage or disability benefits for troubled families.”

The series drew the interest of several members of the psychiatric community and generated responses ranging from criticism to support.

In “‘The Illusions of Psychiatry’: An Exchange,” also published in The New York Review of Books, four of Angell’s critics argue she understates the benefits that psychoactive drugs offer to patients suffering from mental disorders. John Oldham, President of the American Psychiatric Association, argues that even though we lack a complete understanding of the strict chemical basis of mental disorders, the apparent effectiveness of psychiatric medications supports their use as treatment. The remaining critics (Richard A. Friedman, Andrew A. Nierenberg, and Daniel Carlat, all MDs and professors of psychiatry) similarly argue that even if the benefits of these drugs have been overstated by pharmaceutical companies, those benefits remain sufficient to justify their use. They claim psychiatry’s symptomatic drug treatment is similar to that practiced in other medical fields, and accuse Angell of “downplaying the consequences of untreated psychiatric illness.”

In the published dialogue, Angell finds her critics’ replies unconvincing, and reiterates that the studies used to support psychoactive drugs present a biased, industry-sponsored dataset that fails to meet regulatory standards for clinical significance. These problems with the scientific literature are compounded by the fact that diagnosis and treatment of many mental illnesses are based on subjective symptom assessment, not clear biological signs, making them particularly susceptible to bias in both research and practice.

Angell writes,

Both the pharmaceutical industry and the psychiatry profession have strong financial interests in convincing the public that drug treatment is safe and the most effective treatment for mental illnesses, and they also have an interest in expanding the definitions of mental illness.

She concludes, “It is no favor to desperate and vulnerable patients to treat them with drugs that have serious side effects unless it is clear that the benefits outweigh the harms.”

Angell’s argument that the trials supporting psychoactive drugs are clouded by bias is corroborated by two separate reviews of the scientific literature.

In “Selective Publication of Antidepressant Trials and Its Influence on Apparent Efficacy” (2008), Erick Turner et al. assess evidence of publication bias in the psychiatric drug literature. Via a review of premarketing drug data (including unpublished data), the authors observe a bias toward publishing trials with positive outcomes that support the use of drugs.

They found 94% of published antidepressant trials showed positive results. However, using FDA approval databases, they discovered an additional 23 unpublished trials, only one of which had positive results (and 16 of which showed negative results). In this combined body of trials, only 51% showed a positive outcome, and the mean effect size was smaller. In other words, this investigation showed that the published literature on antidepressants misrepresented the trial evidence as a whole, because a significant share of negative trials were left unpublished.

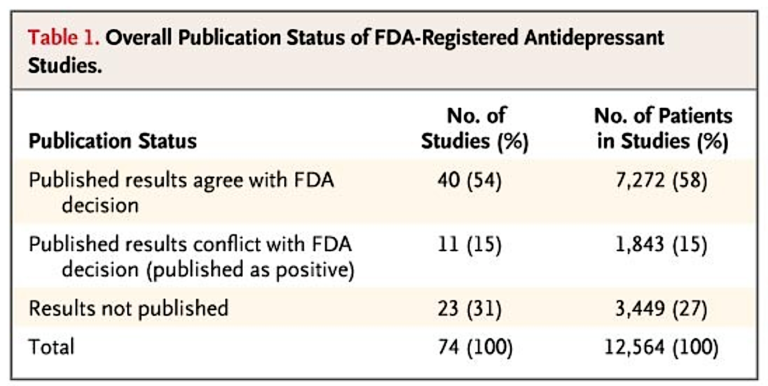

In a further breakdown of these trials and their publication, the authors note that 23 out of 74 trials testing antidepressants were unpublished. Forty-eight out of 51 published trials were reported as having positive results, and three indicated negative results. According to the FDA’s own assessment, 11 of these positive trials either failed to meet the FDA’s methodological standards or in fact showed a negative effect. Among the 23 unpublished trials, one was positive, six had questionable results, and 16 had negative results. Additionally, the mean effect size in unpublished trials was less than half that in the published literature (0.15 vs. 0.37), and the mean effect size conveyed by the published literature was 32% larger than the effect size when all trials were assessed in aggregate (0.41 vs. 0.31).
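The counts in this breakdown can be rechecked with a few lines of arithmetic. The sketch below is only an illustration: the variable names are made up for this example, and it assumes the 51% figure comes from counting as positive only those trials the FDA itself judged positive (the published positive trials minus the 11 the FDA did not accept, plus the one positive unpublished trial).

```python
# Trial counts as stated in the text (Turner et al., 2008).
# All variable names are illustrative, not taken from the paper.
published_positive = 48       # published and reported as positive
published_negative = 3        # published and reported as negative
fda_not_positive = 11         # reported as positive, but questionable or negative per the FDA
unpublished_positive = 1
unpublished_questionable = 6
unpublished_negative = 16

published = published_positive + published_negative
unpublished = unpublished_positive + unpublished_questionable + unpublished_negative
total = published + unpublished

# Share of positive trials as the published literature presents it.
share_positive_published = published_positive / published

# Assumption: "positive overall" means positive according to the FDA.
fda_positive = (published_positive - fda_not_positive) + unpublished_positive
share_positive_all = fda_positive / total


def relative_difference(value, baseline):
    """Return how much larger (or smaller, if negative) value is than baseline."""
    return (value - baseline) / baseline


# Mean effect sizes as stated in the text.
es_published_literature = 0.41
es_all_trials = 0.31

# The same gap looks different depending on which number is the baseline.
inflation = relative_difference(es_published_literature, es_all_trials)
shrinkage = relative_difference(es_all_trials, es_published_literature)

print(f"published trials: {published}, unpublished: {unpublished}, total: {total}")
print(f"positive in published literature: {share_positive_published:.0%}")
print(f"positive across all trials:       {share_positive_all:.0%}")
print(f"published effect size vs. all trials: {inflation:+.0%}")
print(f"all-trials effect size vs. published: {shrinkage:+.0%}")
```

The two relative differences show why the choice of baseline matters: the same 0.10 gap is roughly a one-third increase when measured against the aggregate figure, and roughly a one-quarter decrease when measured against the published figure.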

Table 1

To quote the authors:

According to the published literature, the results of nearly all of the trials of antidepressants were positive. In contrast, FDA analysis of the trial data showed that roughly half of the trials had positive results. The statistical significance of a study’s results was strongly associated with whether and how they were reported, and the association was independent of sample size. The study outcome also affected the chances that the data from a participant would be published. As a result of selective reporting, the published literature conveyed an effect size nearly one third larger than the effect size derived from the FDA data.

Similarly, in “Publication Bias in Antipsychotic Trials: An Analysis of Efficacy Comparing the Published Literature to the US Food and Drug Administration Database” (2012), Turner et al. run an analysis of the scientific literature on antipsychotics. Twenty-four trials were registered with the FDA, 20 of which were published. Fifteen out of 20 published trials showed positive effects, compared with one out of four unpublished trials.

As with the previous results, the mean effect size in unpublished trials was half as large as that seen in published trials (0.23 vs. 0.47). Overall, evidence of publication bias was weaker in antipsychotics than in antidepressants, which the authors hypothesize may be linked to the greater effectiveness antipsychotics have shown on average across the literature.
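As a rough side-by-side, the figures quoted for the two drug classes can be put through the same simple ratios. This is a crude sketch rather than the authors' analysis: the dictionary layout and function name are invented for this example, and the numbers are simply those given in the text.

```python
# Figures as stated in the text for the two reviews.
# The structure and names below are illustrative only.
reviews = {
    "antidepressants": {
        "registered": 74, "published": 51,
        "es_unpublished": 0.15, "es_published": 0.37,
    },
    "antipsychotics": {
        "registered": 24, "published": 20,
        "es_unpublished": 0.23, "es_published": 0.47,
    },
}


def summarize(review):
    """Return the unpublished share and the unpublished/published effect size ratio."""
    unpublished = review["registered"] - review["published"]
    return {
        "unpublished": unpublished,
        "share_unpublished": unpublished / review["registered"],
        "es_ratio": review["es_unpublished"] / review["es_published"],
    }


summary = {name: summarize(review) for name, review in reviews.items()}

# Positive rates for antipsychotic trials, as stated in the text.
antipsychotic_published_positive_rate = 15 / 20
antipsychotic_unpublished_positive_rate = 1 / 4

for name, stats in summary.items():
    print(
        f"{name}: {stats['unpublished']} unpublished "
        f"({stats['share_unpublished']:.0%} of registered trials), "
        f"unpublished/published effect size ratio {stats['es_ratio']:.2f}"
    )
```

On these numbers, a smaller share of antipsychotic trials went unpublished and the effect size gap is slightly narrower, which is in line with the weaker evidence of publication bias described above.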

Taken together, these articles support Angell’s argument that publication bias clouds our ability to understand the true clinical effectiveness of psychiatric drugs, though the magnitude of publication bias may vary between drug classes.

Comments on Psychiatry and Pharma: An Exchange and Additional Reading

This is information we should all have. We see advertisements all the time saying that one in 7 will at some point have to deal with depression or mental illness. It is always good to remember that pills are not magical. They have some good and bad effects, and we should know how much good for how much bad before using them.
