Based in part on the study types identified by Bruce Reider (6).

Anecdotes: Their Power and Limitations

As presented above, the simplest form of evidence is known as an anecdote, wherein an individual expresses his or her opinion of what is the “truth.” A typical example might be a statement such as: “The reason why I ran so well was because I drank so much (or because I did not drink at all) during today’s marathon.”

Of course, either proposition might be correct, but one person’s opinion that this is the case is not sufficient evidence that this opinion is in fact a “truth” that can be generalized to all other humans in all possible circumstances.

However, each person’s experience or “truth” is intensely powerful and will determine how each interprets and responds to the world. In a real sense, we are each an “experiment of one.”

Thus, the athlete who believes that drinking during exercise improves her performance will perform better when she drinks and less well if she is denied access to fluids during exercise. This will happen regardless of whether the value of drinking has been established beyond doubt in properly controlled scientific studies that fulfill all the criteria listed in Table 1.

The outcome will usually be what the athlete believes it will be: the classic placebo effect (7).

This placebo effect is immensely powerful, even if it remains poorly understood. I would argue that it is so powerful that if one does not “believe” in an outcome, that outcome becomes increasingly unlikely ever to occur. I was privileged to present a lecture on belief and sporting outcomes at the 2017 CrossFit Games Scientific Symposium in Madison, Wisconsin (8).

Much of scientific inquiry begins with an anecdote. My personal interest in the possible dangers of over-drinking during exercise — and thus the origins of my book Waterlogged (9) and these columns — stemmed from the letter I received in June 1981 (10), which described the world’s first case (11) of what would become known as Exercise-Associated Hyponatremic Encephalopathy (EAHE).

This form of anecdote is known as a case report. A series of anecdotes can form a case series (11). A case series can produce a powerful indication of what might be the truth, but it cannot establish “truth,” as each case is merely an untested anecdotal observation. To begin the journey toward truth, a superior experimental design is needed to test any hypotheses (theories) the case series suggests.

Claude Bernard, known as the father of modern experimental physiology, described the phenomenon thus: “To reason experimentally, we must first have an idea and afterwards induce or produce facts, i.e., observations to control our preconceived idea” (12).

When faced with an anecdote, the true scientist asks: What hypothesis does this anecdote suggest, and is there any published scientific evidence that tests that hypothesis? If there is none, the scientist can design an experiment of the appropriate evidence grade to begin the scientific process.

The next level of evidence comes from cross-sectional or observational studies. In this study design, a snapshot of a particular phenomenon is taken at one moment, and associations are then sought between some or all of the data collected in the course of the study.

The Wyndham and Strydom study is a classic example of a cross-sectional, observational study in which no attempt was made to control any variable other than the distance the athletes ran.

Yet even the distance was probably not measured precisely. These races were run in 1968, before the introduction of accurate measurement of running distances. Thus, one would predict that the race distance was not exactly the 21.5 miles the authors reported but could have been, for instance, anywhere from 19 to 21 miles. And if the three races were not run on exactly the same course, then it is highly improbable that all three were the same distance.

In Wyndham and Strydom’s observational studies (not “experiments,” since there was no control group), the runners ran a set distance at their own chosen paces in uncontrolled environmental conditions whilst drinking at their own freely chosen rates. The attraction of such observational studies (as I well know, because I began my scientific career doing such studies (13)) is that they are highly cost-effective. The athletes are all studied at the same time whilst doing what they would have done anyway, which makes this a cheap and quick way to gather the observations from which an initial hypothesis can be developed. That hypothesis then can be tested by the much more robust experimental method: the randomized, controlled, prospective clinical trial (RCT).

Wyndham and Strydom’s error, shared by all who became rather slavish devotees of the particular “truth” they believed Wyndham and Strydom had discovered, was to miss a vital step on the road to scientific truth. They allowed a “truth” to be established from an observational study. But observational studies can only ever generate hypotheses, not definitive truths. And because this hypothesis would prove to be wrong, what these researchers ultimately established was nothing more than a “foundation myth.”

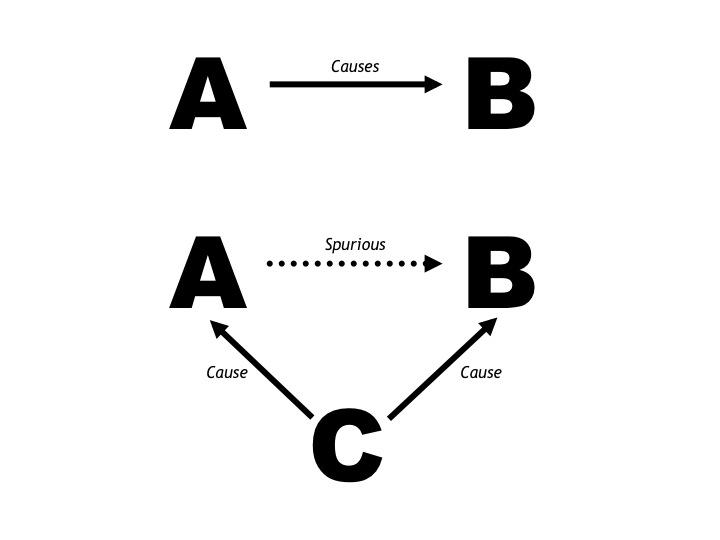

The key weakness in Wyndham and Strydom’s study was that they failed to control for a third factor, metabolic rate, which determines both the rectal temperature and the sweat rate during exercise.

Thus, the most likely explanation for Wyndham and Strydom’s finding of a linear (assumed causal) relationship between the post-race rectal temperature and the runners’ levels of dehydration (discussed in the previous post in this series (14)) is simply that both were determined by how fast the athletes ran in the different races. Those who ran the fastest also sweated the most and hence lost the most weight. But because they ran the fastest, they also sustained the highest metabolic rates (rates of oxygen consumption, or VO2). And since VO2 determines the rectal temperature during exercise, the fastest, heaviest-sweating runners who lost the most weight during the races also had the highest post-race rectal temperatures.

So, for Wyndham and Strydom (or anyone else) to establish their hypothesis as the “truth” requires that it be subjected to the scrutiny of an RCT. Indeed, it is the development of the RCT that differentiates the sciences from the arts and is the cornerstone of the modern medical sciences (even though RCTs are not without their own problems).

The goal of an RCT is to control all possible influences, including the psychological, with the sole exception of the single variable that the scientist believes determines the outcome measurement. That single variable is then allowed to vary in a controlled way in a series of experiments. In this way, the influence of many different factors can be evaluated one at a time in consecutive experiments in a systematic manner. In short, the focus of the scientific method is to undertake experiments in each of which only one variable is allowed to change in a controlled manner. The scientist then measures any effect that changes in that specific variable may have on the key outcome measurement.

Wyndham and Strydom sought to determine a relationship between the outcome measurement — the post-exercise rectal temperature and the risk of heat stroke — and the controlled variable, which was the extent to which the athletes ingested fluid during the 21.5-mile races. To answer this question in the population they chose, only the following experimental design would have been appropriate:

- The environmental conditions would need to be identical during all races or the researchers would need to prove that any variation in environmental conditions was too small to have influenced their conclusions. Alternatively, they could have compared the effects of drinking different volumes of fluid in separate experiments conducted in hot and in cold environments.

- The physical state of the runners would need to be identical at the start of each race, especially their state of training. In addition, none of the athletes should have shown any evidence of medical illness before any of the trials.

- The runners would need to run each race at exactly the same pace. That is, their speeds over the different sections of the course and especially over the last 4 miles of the race would need to be identical under all experimental conditions. (This is because the post-race rectal temperature is determined by the running speed maintained over the last 4 miles of the race.)

- The course over which the races were run would need to be identical.

In experiments in which these four factors were rigorously controlled, the authors then would need to alter the one controlled variable of their study (which in our proposed study would have been the rate at which the runners ingested fluid during the race). Since Wyndham and Strydom believed that only weight losses in excess of 3% were likely to be detrimental, they would need to design experiments in which different rates of fluid intake produced, for example, the following range of weight losses during their 21.5-mile races: 0%, -3%, -4.5%, and -6%.

To do this, they first would have needed all the athletes to run the race at their chosen (but predetermined) running paces in the chosen environmental conditions whilst they drank as they normally did. In effect, this was the study that Wyndham and Strydom reported.

Once they knew how much each athlete sweated when running at his chosen marathon pace in these environmental conditions, they then could calculate how much each would need to drink to finish the 21.5-mile races on the same course under identical environmental conditions with levels of weight loss of 0%, -3%, -4.5%, and -6%.
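The calculation in this step reduces to one subtraction: the fluid a runner must drink equals his sweat loss in the preliminary race minus the weight loss his assigned regimen allows. The sketch below assumes, for simplicity and beyond what the text states, that 1 L of fluid equals 1 kg of body mass and that sweating and drinking are the only routes of weight change (it ignores urine, respiratory water loss, and fuel oxidation). The function name and the runner’s figures are hypothetical.

```python
# Weight-loss targets (% of starting body mass) from the proposed design.
TARGET_LOSSES_PCT = [0.0, 3.0, 4.5, 6.0]


def required_intake_litres(body_mass_kg, sweat_loss_l, target_loss_pct):
    """Fluid (L) a runner must drink to finish at the target % body-weight loss.

    Simplifying assumptions: 1 L of sweat or ingested fluid changes body mass by
    1 kg, and sweating and drinking are the only routes of weight change.
    """
    allowed_loss_kg = body_mass_kg * target_loss_pct / 100.0
    intake_l = sweat_loss_l - allowed_loss_kg
    if intake_l < 0:
        # At this pace the runner cannot lose this much weight even without drinking.
        raise ValueError(
            f"target of -{target_loss_pct}% is unreachable: sweat loss of "
            f"{sweat_loss_l} L is less than the allowed loss of {allowed_loss_kg:.2f} kg"
        )
    return intake_l


# Hypothetical 65 kg runner who sweated 4.2 L in the preliminary race.
for pct in TARGET_LOSSES_PCT:
    print(f"target -{pct}%: drink {required_intake_litres(65.0, 4.2, pct):.2f} L")
```

If a runner sweats too little at his chosen pace to reach a target even when drinking nothing, the function raises an error rather than returning a negative volume, which flags a regimen that is impossible for that runner at that pace.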

So, if their experiments had been designed properly, the study that Wyndham and Strydom actually reported would have been merely a preliminary study to provide essential information for the design of all the subsequent experiments.

Then, over the next few months, whilst the athletes maintained the same levels of fitness and general health, they would complete those races according to a randomized design. This means that in each race there would be equal numbers of runners who were drinking according to a regimen that would produce water deficits of 0, -3%, -4.5%, and -6%. However, the allocation of exactly who drank at what rate during which race would have been decided by random allocation even before the first experimental trial was conducted.

Furthermore, the athletes would be informed of their drinking regimen for each race only immediately before they began that race. This would limit any psychological (placebo) effects arising from the athletes’ knowing in advance what they were to drink during the race.

Importantly, the authors should not express any opinion about which drinking protocol(s) they believed were likely to be optimal, since that also might influence the study outcomes.

Note that in our proposed study, the speeds at which the athletes ran would not be an outcome measure. In other words, the study would not be designed to determine the effects of different drinking patterns on how fast the athletes ran. Instead, running speeds, and hence metabolic rates (VO2), would be held constant in each athlete across all experiments.

But had the speed at which the athletes finished the race been an outcome measure, then ideally the study should not be funded by any organization that had a commercial interest in that outcome, or that had already expressed an opinion of what it expected that outcome to be.

Nor should the study be conducted by scientists who had expressed any opinions on what they expected its outcome to be. For athletes who know what is expected of them during the experiment will do just that: They will do exactly what the researchers expect of them.

Finally, our RCT would involve perhaps 64 runners, which would allow 16 runners to follow each of the four drinking regimens in every race. This number also would increase the probability that any effects of fluid ingestion would be measurable.

In this way, the possible effects of some environmental factor about which we know nothing and which might affect the results of only one or perhaps two of the experimental races would still have an equivalent effect on all four drinking regimens, since all would be represented equally in each experimental trial.
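One way to achieve the balance described in the last few paragraphs is a Latin-square crossover design, in which every runner follows all four drinking regimens once and every regimen is represented equally in every race. The sketch below is a hypothetical illustration, not code the original authors proposed: it builds a cyclic 4 × 4 Latin square, randomly maps the regimens onto it, and deals 64 shuffled runners evenly across its four sequences, all before the first race. The function name `latin_square_allocation` and the seed are assumptions for illustration.

```python
import random

# Target weight losses for the four drinking regimens in the proposed design.
REGIMENS = ["0%", "-3%", "-4.5%", "-6%"]
N_RACES = len(REGIMENS)  # a Latin square needs as many races as regimens


def latin_square_allocation(runner_ids, seed=None):
    """Randomly allocate runners to regimen sequences that form a Latin square.

    Returns {runner_id: [regimen in race 1, race 2, ...]}. Every runner follows
    each regimen exactly once, and every race has equal numbers on each regimen,
    provided the number of runners is a multiple of the number of regimens.
    """
    k = len(REGIMENS)
    runners = list(runner_ids)
    if len(runners) % k:
        raise ValueError("number of runners must be a multiple of the number of regimens")
    rng = random.Random(seed)
    # Randomly decide which regimen plays the role of each symbol 0..k-1.
    symbols = REGIMENS[:]
    rng.shuffle(symbols)
    # Cyclic Latin square: row r, race c gets symbol (r + c) mod k, so each
    # regimen appears once in every row (runner sequence) and every column (race).
    sequences = [[symbols[(r + c) % k] for c in range(N_RACES)] for r in range(k)]
    # Shuffle the runners, then deal them out evenly across the k sequences.
    rng.shuffle(runners)
    return {runner: list(sequences[i % k]) for i, runner in enumerate(runners)}


allocation = latin_square_allocation(range(1, 65), seed=42)
for race in range(N_RACES):
    counts = {reg: sum(seq[race] == reg for seq in allocation.values()) for reg in REGIMENS}
    print(f"race {race + 1}: {counts}")
```

With 64 runners, each race then has 16 runners on each regimen. A fuller randomization could also shuffle the order of the races (the columns), which keeps the square balanced; the cyclic square is used here only because it is easy to check.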

The point, of course, is that Wyndham and Strydom did not schedule the five 21.5-mile races (one preliminary race and four experimental races) that would have been necessary to prove the “antipyretic” effect of water drinking during running.

As a result, they could not exclude the possibility that at least one other factor, in particular the rate of energy use or VO2, was the real reason for the spurious relationship they uncovered between body-weight loss during those races and post-race rectal temperature.

To summarize: An alternative explanation for an apparently causal relationship between dehydration and hyperthermia is that the faster the runners ran in that study, the higher were their metabolic rates (VO2) and, as a consequence, their body temperatures, sweat rates, and levels of dehydration. The speed at which the fastest runners ran would have produced the highest VO2, which in turn would have generated the highest body temperatures and the highest sweat rates in those runners. Since all runners drank sparingly during those races, as was the custom at that time, those who ran the fastest and sweated the most whilst drinking little also would have lost the most weight and been the most “dehydrated” at the finish.

There is one final factor to consider. In the 1960s, only well-trained athletes with reasonable athletic ability competed in marathon races. Most of these runners would have been of quite similar weights, probably between 55 and 70 kg (121 and 154 pounds). Since the VO2 during running is determined by running speed and body mass, the relatively similar body weights of all these runners (compared to large differences in modern marathoners) would have meant the major determinant of differences in VO2 between the runners in these races would have been differences in their running speeds.
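The point that VO2 depends on both running speed and body mass can be illustrated with the ACSM metabolic equation for level running, a standard estimating equation that the original text does not itself use. Absolute oxygen uptake is the relative value multiplied by body mass. The masses come from the 55 to 70 kg range given above; the 12 to 18 km/h speed range and the 62 kg reference runner are assumptions for illustration only.

```python
def acsm_running_vo2(speed_kmh, mass_kg, grade=0.0):
    """Absolute VO2 (L/min) estimated with the ACSM metabolic equation for running.

    Relative VO2 (mL/kg/min) = 0.2 * S + 0.9 * S * G + 3.5, where S is speed in
    m/min and G is the fractional grade. The equation is intended for running
    speeds faster than about 134 m/min (roughly 8 km/h).
    """
    s = speed_kmh * 1000.0 / 60.0  # km/h -> m/min
    relative = 0.2 * s + 0.9 * s * grade + 3.5  # mL O2 per kg per min
    return relative * mass_kg / 1000.0  # mL/min -> L/min


# Effect of body mass alone: 55 kg vs 70 kg runners at the same 16 km/h.
mass_low, mass_high = acsm_running_vo2(16, 55), acsm_running_vo2(16, 70)
# Effect of speed alone: a 62 kg runner at 12 km/h vs 18 km/h.
speed_low, speed_high = acsm_running_vo2(12, 62), acsm_running_vo2(18, 62)
print(f"mass effect:  {mass_low:.2f} -> {mass_high:.2f} L/min")
print(f"speed effect: {speed_low:.2f} -> {speed_high:.2f} L/min")
```

Under these assumed ranges, the spread in VO2 produced by differences in speed is larger than that produced by differences in body mass, consistent with the argument above; with a wider spread of body masses, as in modern marathon fields, mass would contribute more.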

Relevance to Nutrition Science

The scientific basis for most of what is taught in the modern nutrition sciences is cross-sectional observational studies, also known as epidemiological studies, which (like Wyndham and Strydom’s study) cannot prove causation except in certain very exceptional circumstances.

It is perhaps not a complete surprise to readers of this series that I have been involved in a 4½-year trial of my professional conduct for giving dietary advice that was not considered “conventional” or “evidence-based.” In the end, as described in Marika Sboros’ and my book, Lore of Nutrition (20), I was found innocent of all charges.

What I learned in the process is that most of the ideas that are taught in the nutrition sciences as indisputably true are in fact no better than “foundation myths” because they are based on observational studies that cannot prove causation. The greatest critic of the nutrition sciences is one of the most respected and reputable scientists of modern times, Dr. John Ioannidis, Professor of Medicine at Stanford University (21-22). To say that his status in the profession is close to genius is not an overstatement.

In essence, his message is that as far as nutrition research is concerned, we need to begin all over again. Recently, in an interview with CBC News, Ioannidis said, “Nutritional epidemiology is a scandal. It should just go to the waste bin.” No doubt we will return to this topic frequently in the future.

A Final Word on Meta-Analyses

Table 1 lists meta-analysis as the highest form of evidence. But this clearly depends on the quality of the studies that make up any particular meta-analysis, as well as the independence of the persons conducting the meta-analysis.

Ioannidis notes that the production of meta-analysis has become a major growth industry in modern science: “Currently there is a massive production of unnecessary, misleading, and conflicted systematic reviews and meta-analyses. Instead of promoting evidence-based medicine and health care, these instruments often serve mostly as easily produced publishable units or marketing tools. Suboptimal systematic reviews and meta-analyses can be harmful” (23). He concludes that only 3% of all meta-analyses are clinically useful.

So, How Do You Judge Which Science Is Likely to Be True?

Consider the following principles:

- Consider all the evidence. The evidence you either don’t know about or choose to ignore will come back to haunt you. There is a saying that everyone is entitled to his or her own opinion, but no one is entitled to his or her own set of facts.

- The most important evidence is not that which supports your biases. The most important evidence is that which disagrees with what you believe. A single credible study (done by reputable scientists who do not have an agenda) that refutes your ideas is more important information for you than hundreds of studies that apparently support your biases.

- If a new idea conflicts with what is generally accepted as a biological truth, it is probably wrong unless it is presented together with a body of new and credible evidence that disproves those previously accepted biological “truths.” Biology always rules (see the previously cited quote from John Le Fanu (4)).

Additional Reading

- The Hyponatremia of Exercise, Part 1

- The Hyponatremia of Exercise, Part 2

- The Hyponatremia of Exercise, Part 4

- The Hyponatremia of Exercise, Part 5

- The Hyponatremia of Exercise, Part 6

- The Hyponatremia of Exercise, Part 7

- The Hyponatremia of Exercise, Part 8

- The Hyponatremia of Exercise, Part 9

- The Hyponatremia of Exercise, Part 10

- The Hyponatremia of Exercise, Part 11

- The Hyponatremia of Exercise, Part 12

Professor T.D. Noakes (OMS, MBChB, MD, D.Sc., Ph.D.[hc], FACSM, [hon] FFSEM UK, [hon] FFSEM Ire) studied at the University of Cape Town (UCT), obtaining an MBChB degree and an MD and DSc (Med) in Exercise Science. He is now an Emeritus Professor at UCT, following his retirement from the Research Unit of Exercise Science and Sports Medicine. In 1995, he was a co-founder of the now-prestigious Sports Science Institute of South Africa (SSISA). He has been rated an A1 scientist by the National Research Foundation of SA (NRF) for a second five-year term. In 2008, he received the Order of Mapungubwe, Silver, from the President of South Africa for his “excellent contribution in the field of sports and the science of physical exercise.”

Noakes has published more than 750 scientific books and articles. He has been cited more than 16,000 times in scientific literature and has an H-index of 71. He has won numerous awards over the years and has served on many editorial boards. He has authored many books, including Lore of Running (4th Edition), considered to be the “bible” for runners; his autobiography, Challenging Beliefs: Memoirs of a Career; Waterlogged: The Serious Problem of Overhydration in Endurance Sports (in 2012); and The Real Meal Revolution (in 2013).

Following the publication of the best-selling The Real Meal Revolution, he founded The Noakes Foundation, the focus of which is to support high-quality research on the low-carbohydrate, high-fat diet, especially for those with insulin resistance.

He is highly acclaimed in his field and, at age 67, still is physically active, taking part in races up to 21 km as well as regular CrossFit training.

References

- Wyndham CH, Strydom NB. The danger of an inadequate water intake during marathon running. S. Afr. Med. J. 43(1969): 893-896.

- American College of Sports Medicine. Position stand on the prevention of thermal injuries during distance running. Med. Sci. Sports Exerc. 19(1987): 529-533.

- Convertino VA, Armstrong LE, Coyle EF, et al. American College of Sports Medicine position stand: Exercise and fluid replacement. Med. Sci. Sports Exerc. 28(1996): i-vii; Armstrong LE, Epstein Y, Greenleaf JE, et al. American College of Sports Medicine position stand. Heat and cold illnesses during distance running. Med. Sci. Sports Exerc. 28(1996): i-x.

- Le Fanu J. The Rise and Fall of Modern Medicine. New York, NY: Basic Books, 2012.

- Sowell T. The Vision of the Anointed: Self-Congratulation as a Basis for Social Policy. New York, NY: Basic Books, 1996.

- Reider B. Read early and often. Am. J. Sports Med. 33(2005): 21-22.

- Beedie CJ. Placebo effects in competitive sport: Qualitative data. J. Sports Sci. Med. 6(2007): 21-28.

- Noakes TD. Character, self-belief, and the search for perfection. Lecture delivered at the 2017 CrossFit Games Scientific Symposium, August 2017, Madison, WI.

- Noakes TD. Waterlogged: The serious problem of overhydration in endurance sports. Champaign, IL: Human Kinetics, 2012.

- Noakes TD. Comrades makes medical history—again. S.A. Runner. 4(September 1981): 8-10.

- Noakes TD, Goodwin N, Rayner BL, et al. Water intoxication: A possible complication during endurance exercise. Med. Sci. Sports Exerc. 17(1985): 370-375.

- Bernard C. An Introduction to the Study of Experimental Medicine. London. Mineola, NY: Dover Publications, 1957. 12.

- Noakes TD, Carter JW. Biochemical parameters in runners before and after having run 160 km. S. Afr. Med. J. 50(1976): 1562-1566.

- Noakes TD. The hyponatremia of exercise, part 1. CrossFit.com. Feb. 28, 2019.

- Beedie CJ, Stuart EM, Coleman DA, et al. Placebo effects of caffeine on cycling performance. Med. Sci. Sports Exerc. 38(2006): 2159-2164.

- Beedie CJ, Coleman DA, Foad AJ. Positive and negative placebo effects resulting from the deceptive administration of an ergogenic aid. Int. J. Sport Nutr. Exerc. Metab. 17(2007): 259-269.

- Benedetti F, Pollo A, Colloca L. Opioid-mediated placebo responses boost pain, endurance and physical performance: Is it doping in sport competitions? J. Neurosci. 27(2007): 11934-11939.

- Clark VR, Hopkins WG, Hawley JA, et al. Placebo effect of carbohydrate feedings during a 40-km cycling time trial. Med. Sci. Sports Exerc. 32(2000): 1642-1647.

- Budiansky S. The nature of horses. Exploring equine evolution, intelligence and behaviour. New York, NY: Free Press, 1997.

- Noakes TD, Sboros M. Lore of Nutrition. Challenging Conventional Dietary Beliefs. Cape Town, South Africa: Penguin Books, 2018.

- Ioannidis JPA. We need more randomized trials in nutrition—preferably large, long-term, and with negative results. Am. J. Clin. Nutr. 103(2016): 1385-1386.

- Ioannidis JPA. The challenge of reforming nutritional epidemiologic research. JAMA. Published online August 23, 2018.

- Ioannidis JPA. The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Quarterly 94(2016): 485-514.

- Ioannidis JPA. Evidence-based medicine has been hijacked: A report to David Sackett. J. Clin. Epidemiol. 73(2016): 82-86.