David Diamond and Uffe Ravnskov argue the widely described benefits of statins are largely attributable to the strategic use of statistical techniques to simultaneously increase the perceived benefits and minimize the perceived risks.

These biases are introduced primarily through the selective use of absolute and relative risk measures. Absolute risk reduction is the difference in event rates between the control and treatment groups: the share of the population that was spared an adverse event by a treatment (or, for harms, that experienced a side effect because of it). Relative risk is the ratio between the affected shares of the two groups, and relative risk reduction is the amount by which that ratio falls short of 1.

The authors provide the example of a theoretical 2,000-person, five-year statin study in which 2 percent of subjects in the placebo group and 1 percent of subjects in the treatment group experience a heart attack. The absolute risk reduction is 1 percentage point: 99 percent of subjects in the treatment group did not experience a heart attack over five years, and we would expect 98 percent of them to have avoided one without treatment.

Expressed as the number needed to treat (NNT), which is the reciprocal of the absolute risk reduction, this means 100 subjects would have to take statins for five years for one of them to avoid a heart attack they would otherwise have had. The relative risk reduction, by contrast, would be 50 percent, because 1 percent (the share of the population affected in the treatment group) is 50 percent of 2 percent (the share affected in the control group). Broadly, the authors show how the consistent use of relative risk for trial benefits and absolute risk for side effects (or the outright suppression of side effects) has led statins to be seen as more beneficial than the data suggest they ought to be.
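The arithmetic behind this hypothetical trial can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: the function name `risk_measures` and its parameters are made up for this example, and rates are passed as fractions rather than percents.

```python
def risk_measures(control_rate, treated_rate):
    """Summarize a two-arm trial from its event rates (fractions, not percents).

    Hypothetical helper for illustration only.
    """
    arr = control_rate - treated_rate  # absolute risk reduction
    rrr = arr / control_rate           # relative risk reduction
    nnt = 1 / arr                      # number needed to treat
    return arr, rrr, nnt


# Hypothetical trial from the text: 2% of placebo subjects and 1% of
# treated subjects have a heart attack over five years.
arr, rrr, nnt = risk_measures(control_rate=0.02, treated_rate=0.01)
print(f"ARR: {arr:.1%}  RRR: {rrr:.0%}  NNT: {nnt:.0f}")
```

The same pair of event rates yields a 1-point absolute reduction, a 50 percent relative reduction, and an NNT of 100. Which of the three is quoted determines how large the benefit sounds.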

Diamond and Ravnskov outline a series of trials as specific examples. The JUPITER trial, which tested rosuvastatin in 17,802 healthy people (half randomized to control), found that 68 controls (0.76 percent) and 31 treated subjects (0.35 percent) experienced a fatal or nonfatal heart attack. This indicates an absolute risk reduction of 0.41 percentage points (an NNT of 244), but the study was widely reported as evidence that “more than 50 percent avoided a fatal heart attack,” a figure based on the relative risk reduction. It was subsequently noted for its “spectacular” benefits.
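As a worked check on the JUPITER figures, the three measures can be derived from the rounded percentages quoted above (so the final digits are approximate):

```latex
\begin{aligned}
\text{ARR} &= 0.76\% - 0.35\% = 0.41 \text{ percentage points} \\
\text{RRR} &= \frac{0.41}{0.76} \approx 0.54 = 54\% \\
\text{NNT} &= \frac{1}{0.0041} \approx 244
\end{aligned}
```

Both the 54 percent and the 0.41 points describe the same difference of 37 events between the two arms. Only the denominator changes.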

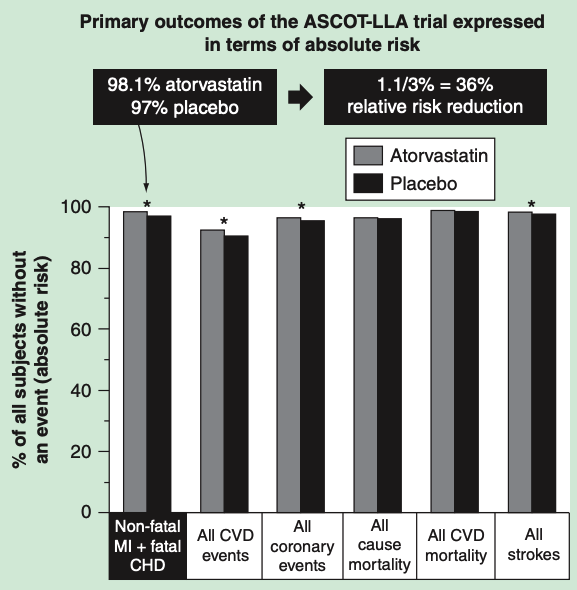

The Anglo-Scandinavian Cardiac Outcomes Trial—Lipid-Lowering Arm (ASCOT-LLA) randomized 10,305 hypertensive individuals to atorvastatin or placebo. The trial was stopped after 3.3 years because it met its primary endpoint—3 percent in the placebo group suffered a heart attack compared to 1.9 percent in the treatment group. There was no effect on cardiovascular mortality, and no benefit in women or patients younger than 60. Yet this 1.1 percent absolute risk reduction was reported as a 36 percent reduction in heart-attack risk—a claim subsequently used in Lipitor ads.

Data from the ASCOT-LLA trial representing the percent of patients without clinical events in the atorvastatin arm (grey) and in the placebo arm (black). *Statistically significant group differences based on relative risk reduction. From “How statistical deception created the appearance that statins are safe and effective in primary and secondary prevention of cardiovascular disease.”

The British Heart Protection Study randomized 20,000 adults with cardiovascular disease (CVD) and/or diabetes to simvastatin or placebo for five years. Described as the “world’s largest cholesterol-lowering trial,” it found that 7.6 percent of patients died because of CVD in the treatment group and 9.1 percent in the placebo group—an absolute risk reduction of 1.5 percent but a relative risk reduction of 18 percent.

Diamond and Ravnskov note the logic often used to support extrapolation from these sorts of results: Given the enormous number of Americans affected by heart disease, a small impact applied to a very large population still could save thousands of lives each year. This logic assumes, however, that these cholesterol-lowering interventions are not increasing risk for any other conditions—because if we give a drug to millions of Americans, we scale up both the benefits and any damage it causes.

The authors provide evidence suggesting statins are not, in fact, harmless. In JUPITER, more subjects in the treatment group became diabetic. (Here, the fact that 3 percent of patients in the treatment group became newly diabetic compared to 2.4 percent of controls was reported as a 0.6 percent absolute risk increase—rather than a 25 percent increase in risk of diabetes.)
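To see the asymmetry in framing, the benefit and the harm from JUPITER can be put through the same calculation. The sketch below uses only the percentages quoted in this article. The helper `framings` and the per-10,000 scaling are illustrative assumptions, not figures from the paper, and the result is plain arithmetic on the reported rates rather than a clinical estimate.

```python
def framings(control_pct, treated_pct):
    """Return (absolute change in percentage points, relative change in percent).

    Hypothetical helper for illustration; negative values mean fewer events
    in the treatment group, positive values mean more.
    """
    absolute = treated_pct - control_pct
    relative = absolute / control_pct * 100
    return absolute, relative


# Event rates in percent, as quoted in the text for JUPITER.
outcomes = {
    "heart attack": framings(control_pct=0.76, treated_pct=0.35),
    "new diabetes": framings(control_pct=2.4, treated_pct=3.0),
}

for label, (absolute, relative) in outcomes.items():
    per_10k = absolute * 100  # percentage points -> events per 10,000 treated
    print(f"{label:>12}: {absolute:+.2f} points, {relative:+.0f}% relative, "
          f"{per_10k:+.0f} per 10,000 treated")
```

Under the same framing, the heart-attack benefit is roughly 0.41 points (about 54 percent relative) and the diabetes harm is roughly 0.6 points (25 percent relative). Reporting the first as a relative figure and the second as an absolute one makes the benefit look large and the harm look small.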

In the CARE trial, a secondary prevention trial using pravastatin, 12 women in the treatment group developed breast cancer over five years, compared to one in the control group. This significant difference was dismissed because the authors did not believe there was a mechanism by which pravastatin could increase cancer risk, a belief Diamond and Ravnskov discredit.

PROSPER, another pravastatin trial, similarly showed a significant increase in cancer mortality in the treatment group.

SEAS, a trial of simvastatin and ezetimibe, also showed a significant increase in cancer incidence in the treatment group. Diamond and Ravnskov suggest that if this effect is real, longer statin trials may show an even more dramatic effect; one case-control study of women taking statins for 10 years showed a doubling of ductal and lobular breast-cancer risk.

Finally, a variety of other evidence is presented suggesting statins have been linked to myopathy, central-nervous-system pathology, and mood and cognitive disorders.

Ravnskov and Diamond conclude:

“We have documented that the presentation of statin trial findings can be characterized as a deceptive strategy in which negligible benefits of statin treatment have been amplified with the use of relative risk statistics, and that serious adverse effects are either ignored or explained away as … chance occurrences. Moreover, the authors of these studies have presented the rate of adverse events in terms of absolute risk, which, compared to relative risk, minimizes the appearance of their magnitudes.”

